Technical SEO Guide

What Is Technical SEO?

Let’s be honest: SEO can feel a bit magical… and a bit overwhelming. You write content, earn backlinks, and tweak your meta titles, but there’s a whole behind-the-scenes world that’s easy to overlook. That’s where technical SEO comes in.

So, what exactly is technical SEO?

In simple terms, technical SEO covers everything that helps search engines crawl, understand, and index your website more efficiently. It’s not about keywords or backlinks; it’s about making sure that Google’s bots can access your site without getting lost or confused.

Think of it like this: you might have incredible content, but if your site loads slowly, has broken links, or makes it hard for Google to understand your pages, you’re basically throwing that content into the void. Google won’t rank what it can’t read properly.

That’s where technical SEO saves the day.

Why Is Technical SEO Important?

Imagine walking into a restaurant. The outside looks amazing, but when you step in, there are no tables, the lights flicker, and no one speaks your language. That’s what a technically broken website feels like to Google.

Here’s what can go wrong:


- Your pages take too long to load? Visitors bounce.

- Your site structure is confusing? Google can’t crawl it efficiently.

- Not mobile-friendly? You’re likely to be pushed down the rankings.

- Got broken links? You lose trust—both from users and search engines.

The bottom line? None of your great content matters if the technical foundation is weak.

Technical SEO is the foundation of your site. Without a strong base, the fanciest content and best backlinks can’t save it. And Google? It’s not in the habit of trusting wobbly websites.

How Is Technical SEO Different From On-Page and Off-Page SEO?

SEO rests on three core pillars:

| SEO Type | Focus Area |
| --- | --- |
| On-Page SEO | Page content, headings, internal links, keyword usage, media optimization |
| Off-Page SEO | Backlinks, social signals, brand authority |
| Technical SEO | Site speed, crawlability, indexation, structure, HTTPS, structured data |

Picture it like a stage:

- On-Page SEO is the performance – the story, the script, the actors.

- Off-Page SEO is the audience buzz – reviews, chatter, reputation.

- Technical SEO is everything behind the curtain – the lights, the stage rigging, the sound system.

Without a solid, functional stage, the best actors and reviews won’t matter. Technical SEO ensures that your site works for both humans and machines: fast, clean, and accessible.

How Do Search Engines Crawl and Index Your Website?

What Does Crawling Mean in SEO?

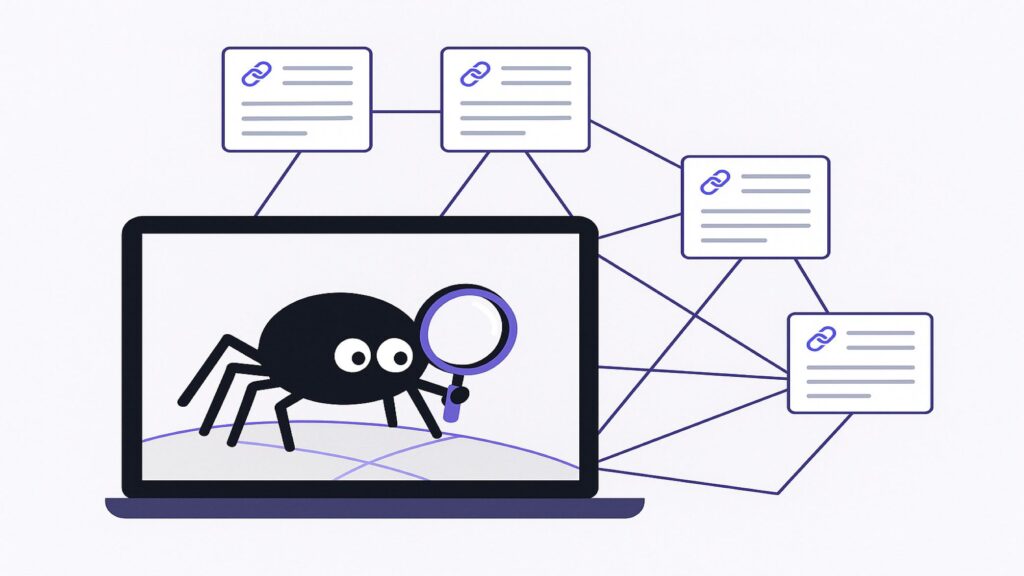

Let’s start with a simple question: How does Google even know your website exists?

The answer is crawling. When you publish a page on the internet, it doesn’t magically appear in Google search results. First, Google (or another search engine) sends out little bots, called crawlers or spiders, to explore the web. Their job? To discover new content and revisit old pages to see if anything has changed.

Imagine these crawlers like super-efficient librarians. They follow links from one page to another, scanning the content and structure of each site. If your site is easy to navigate, they’ll find more pages. If it’s confusing, they might hit a dead end.

And here’s the kicker: if your page isn’t crawled, it can’t be indexed. And if it’s not indexed, it’s not going to show up in search results. At all.

So step one in the SEO journey? Make sure search engines can find your stuff.

What Is Indexing and Why Does It Matter?

After crawling comes indexing, and no, it’s not the same thing.

Think of indexing like a giant filing cabinet inside Google’s brain. Once the crawlers have gathered your page, they analyze its content, structure, media, and metadata to decide what the page is about. If it checks all the boxes (i.e., it’s not spammy, broken, or duplicated), it gets stored in Google’s index.

From that point on, your page becomes eligible to appear in search results when someone types in a related query.

But if a page isn’t indexed? It’s invisible. It doesn’t matter how perfect your content is or how relevant your keywords are: Google can’t serve a page it hasn’t filed away.

So the real goal of technical SEO? Make crawling easy and indexing inevitable.

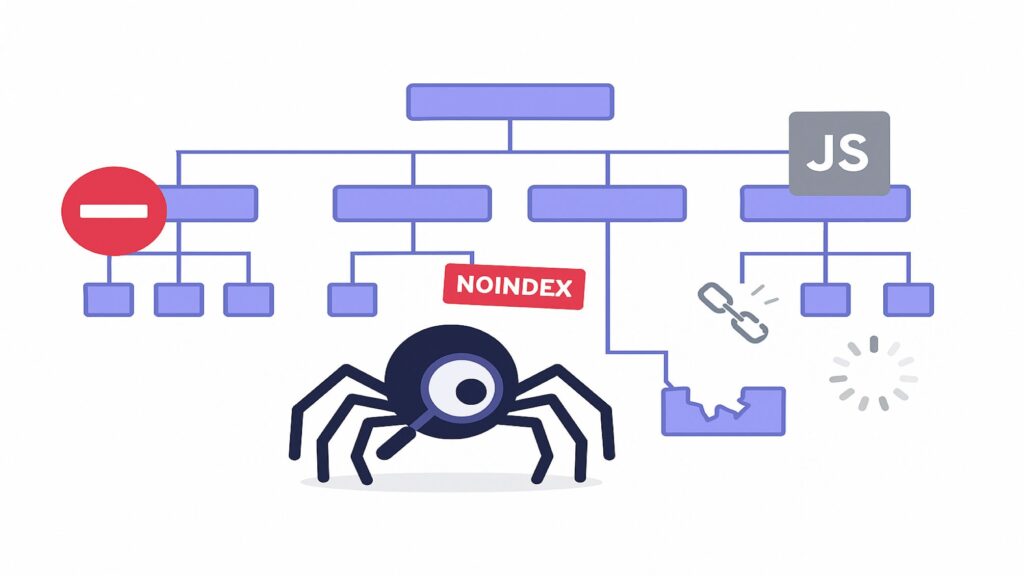

What Blocks Crawling and Indexing?

Even if you’re writing A+ content, there are plenty of ways to accidentally block search engines from seeing it:

- Noindex tags: These tell search engines not to index a page. Useful sometimes, but dangerous if applied by mistake.

- Disallow rules in robots.txt: This file can stop crawlers from accessing certain folders or pages entirely.

- Broken internal links: If your site structure is messy, crawlers may never reach some of your pages.

- Slow loading speeds: Crawlers work on a time and resource budget—slow pages may not get fully crawled.

- JavaScript-heavy content: If your site depends on JS to load key elements, bots might miss them altogether unless it’s properly rendered.

All of these are technical SEO issues. They don’t relate to content quality, but they can make or break your ability to rank.

How Can You Help Search Engines Crawl and Index Your Website?

There’s good news: you’re not powerless. In fact, you have several tools and techniques at your disposal to guide search engines through your site:

- Submit an XML Sitemap via Google Search Console. Think of it as a roadmap that tells crawlers, “Here are all the pages I want you to see.”

- Fix broken links and keep your internal linking clear and intentional.

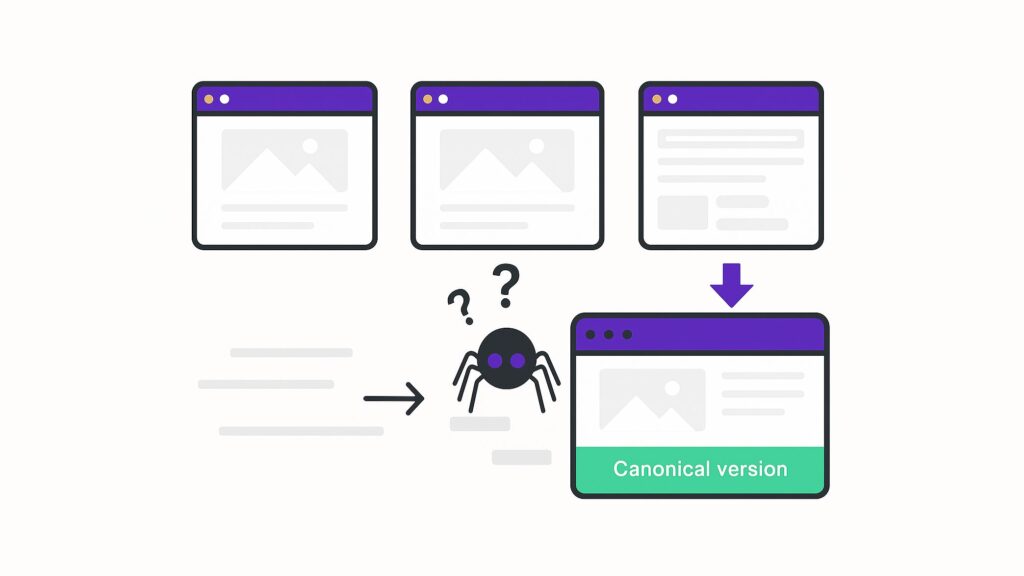

- Use canonical tags to prevent duplicate content confusion.

- Implement structured data (like Schema markup) to help Google understand your page elements.

- Improve your site speed and mobile usability, so bots don’t get stuck.

- Regularly check indexing coverage in Google Search Console to catch red flags early.

In short: make it easy, make it fast, and make it clean.

How Can You Improve Your Technical SEO?

You might be wondering, “Okay, I get what technical SEO is… but what do I actually do to make it better?” Great question.

Here’s the thing: technical SEO isn’t about flashy tricks or quick wins. It’s about building a solid foundation. And like any good foundation, it requires a bit of digging, some honest audits, and regular maintenance. But don’t worry—it’s not as scary (or as “technical”) as it sounds.

Let’s walk through the core areas where you can start making a real difference today.

1. Start With Speed Because No One Likes to Wait

Think about the last time you clicked on a link and waited… and waited… only to give up and hit the back button. That’s exactly what your visitors do when your site drags. And Google? It notices.

Speed is one of those rare things that matter equally to both people and algorithms. You don’t need to chase a perfect score, but you do want to smooth out the basics. Even shaving off a second or two can make a big difference, not just in rankings, but in how your brand feels.

Here’s how to improve speed without going full developer mode:

- Compress your images before uploading. Tools like TinyPNG or Squoosh do wonders.

- Use lazy loading so images only load when they’re about to appear on screen.

- Minify CSS, JavaScript, and HTML. Removing unnecessary code can drastically reduce file sizes.

- Enable browser caching so returning visitors don’t have to reload everything from scratch.

- Use a CDN (Content Delivery Network) to serve content faster across different locations.

Tip: Run your site through PageSpeed Insights and aim for a score above 90, but don’t obsess. Even small improvements make a big difference.

2. Make Your Site Mobile-Friendly

This isn’t 2010. Mobile-first indexing is Google’s default now, meaning your site is judged primarily by how it performs on mobile devices, not desktop.

So test your site like you’re a visitor on a crowded bus, one-handedly scrolling through your homepage. Are the fonts readable? Are the buttons tappable? Is the experience intuitive? If anything feels clunky or awkward, it’s time for a cleanup.

Responsive design is non-negotiable now. Think of it as table stakes, not a feature.

3. Improve Crawlability and Internal Linking

Imagine inviting someone to your house, but you’ve hidden the front door, blocked off the hallway, and covered all the signs. That’s what a poorly structured site feels like to Google’s crawlers.

- Use clean, descriptive URLs (e.g., /about-us instead of /page?id=123).

- Create a logical site hierarchy with clear categories and subcategories.

- Add internal links from high-traffic pages to new or underperforming content. Not only does it help visitors explore more of your content, but it also gives search engines clear pathways to follow. You don’t need a sprawling labyrinth of links – just enough to say, “Hey, if you liked this, you might like that too.”

- Keep your navigation consistent across the site. Don’t bury important pages more than three clicks deep. And don’t forget about the sitemap: it’s like handing Google a map with the best spots circled.

- Avoid orphan pages – every page should be reachable through at least one internal link.

Remember, if a bot can’t find a page, it doesn’t exist in Google’s eyes.

4. Use a Proper Sitemap and Robots.txt

These two files don’t get much spotlight, but they quietly influence how search engines treat your site. Think of your sitemap.xml as your site’s resume—it lists the pages you want search engines to notice. And think of robots.txt as your bouncer—it tells crawlers where they’re not welcome.

Most platforms handle these for you, but it’s still worth checking them every now and then, especially if you’ve recently added new sections, changed URLs, or redesigned anything major. You don’t want to accidentally slam the door shut in Google’s face.

Best practices:

- Always submit your sitemap through Google Search Console.

- Keep your sitemap updated automatically (most CMSs can do this).

- Don’t block important resources like CSS or JS files unless absolutely necessary.

- Use Disallow rules wisely in robots.txt – don’t accidentally lock out pages you want indexed.
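To make the "don't lock out pages you want indexed" check concrete, here is a minimal sketch using Python's standard-library robots.txt parser. The robots.txt content and the example.com URLs are made up for illustration. Note too that the standard-library parser does plain prefix matching and does not understand Google's `*` and `$` wildcards, so treat it as a rough first pass rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; swap in your site's real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that this robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical list of pages you expect to be crawlable.
important_pages = [
    "https://example.com/",
    "https://example.com/blog/technical-seo-guide",
    "https://example.com/search?q=shoes",
]

for url in blocked_urls(ROBOTS_TXT, important_pages):
    print("Blocked by robots.txt:", url)
```

Running something like this after every robots.txt change is a cheap way to catch an overly broad Disallow rule before Google does.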

5. Fix Duplicate Content Issues

Google doesn’t like seeing the same content in multiple places because it can confuse crawlers and dilute ranking signals. Sometimes you create it on purpose (hello, product variations), and sometimes it sneaks in behind your back (hi, printer-friendly pages). Either way, duplicate content sends mixed signals to search engines, and mixed signals rarely rank well.

Canonical tags are your friend here. They quietly say, “Hey, I know there are similar versions of this page, but this one is the real deal.” And when something really doesn’t need to be indexed, a simple noindex does the trick.

How to handle it:

- Use canonical tags to tell Google which version of a page is the “main” one.

- Set proper redirects (301s) where needed.

- Regularly audit for duplicate title tags and meta descriptions.

What matters is clarity. You’re not trying to hide anything – you’re just helping Google understand which pages matter most.
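The duplicate-title audit mentioned above is easy to sketch in a few lines of Python. This assumes you already have a crawl export mapping each URL to its title tag text (most crawler tools can produce one). The URLs and titles below are invented for illustration.

```python
from collections import defaultdict


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by normalized title text and keep groups with more than one URL."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, title in pages.items():
        key = " ".join(title.lower().split())  # ignore case and extra whitespace
        groups[key].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export: URL -> title tag text.
crawl = {
    "/shoes": "Running Shoes | Example Store",
    "/shoes?sort=price": "Running Shoes | Example Store",
    "/shoes/print": "running shoes  | example store",
    "/about-us": "About Us | Example Store",
}

for title, urls in find_duplicate_titles(crawl).items():
    print(f"{title!r} is shared by {urls}")
```

Each group it reports is a candidate for a canonical tag, a 301 redirect, or a rewritten title, depending on whether the pages are true duplicates.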

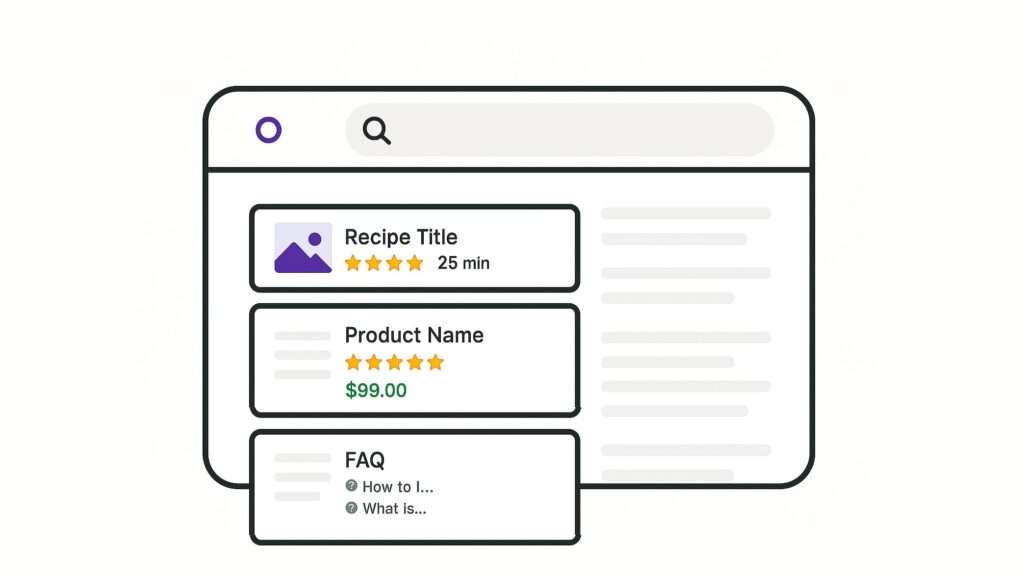

6. Add Structured Data (Schema Markup)

Have you ever seen a recipe in Google search results, complete with star ratings, cook time, and a picture, all before you even clicked? That’s structured data at work. Structured data is like whispering in Google’s ear: “Hey, here’s exactly what this page is about.”

By adding schema markup to your pages, you’re giving search engines context clues. “This is a product,” “This is a review,” “This is an FAQ.” You’re not changing how your page looks to users, but you’re definitely changing how it performs in the SERPs.

It’s not mandatory, but when done right, it can give you a little visual boost that sets you apart. Google sometimes rewards well-marked-up pages with rich results like review stars, FAQs, or recipe cards.

You can add schema for:

- Articles and blog posts

- Products and reviews

- Events

- FAQs

- How-to guides

Use Schema.org as your reference point and test your markup with Google’s Rich Results Test.
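As a rough illustration of what schema markup looks like in practice, the sketch below builds a JSON-LD snippet for an Article. The property names come from the Schema.org Article type. The function name, headline, author, date, and URL are placeholders, and real pages often need more properties than this to qualify for rich results.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Build a <script> tag containing JSON-LD for a Schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
snippet = article_json_ld(
    headline="Technical SEO Guide",
    author="Jane Doe",
    date_published="2024-01-15",
    url="https://example.com/blog/technical-seo-guide",
)
print(snippet)
```

Paste the output into the page's HTML and run the URL through Google's Rich Results Test to confirm the markup parses.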

7. Regularly Audit and Monitor

You wouldn’t leave the house without brushing your teeth (hopefully). Your site deserves the same regular check-in. Even the most well-optimized site drifts over time. That’s why ongoing audits are essential.

Every month or so, take a few hours to:

- Check Google Search Console for crawl errors, indexing issues, or Core Web Vitals problems

- Use a crawler tool (like Screaming Frog or Sitebulb) to identify broken links, redirect chains, or missing meta tags

- Review your robots.txt and sitemap for accidental blocks

- Make sure all pages still have one (and only one) canonical version

This kind of low-key maintenance goes a long way. You don’t need to obsess. Just stay aware.

How Can You Build a Site Structure That Works for Both Users and Search Engines?

You can have the fastest, most technically sound website in the world…

But if everything feels like it’s scattered in a digital junk drawer, people will bounce and Google won’t stick around, either.

So let’s talk about structure.

Your website’s structure isn’t just a UX thing. It’s not just about pretty menus or aesthetic vibes. It’s the backbone of how your entire site is understood, crawled, and indexed. It shapes how users feel when they’re on your site, and how bots move through it behind the scenes.

And if either of those experiences is messy? You’ll feel it—in your rankings, your bounce rate, and your conversions.

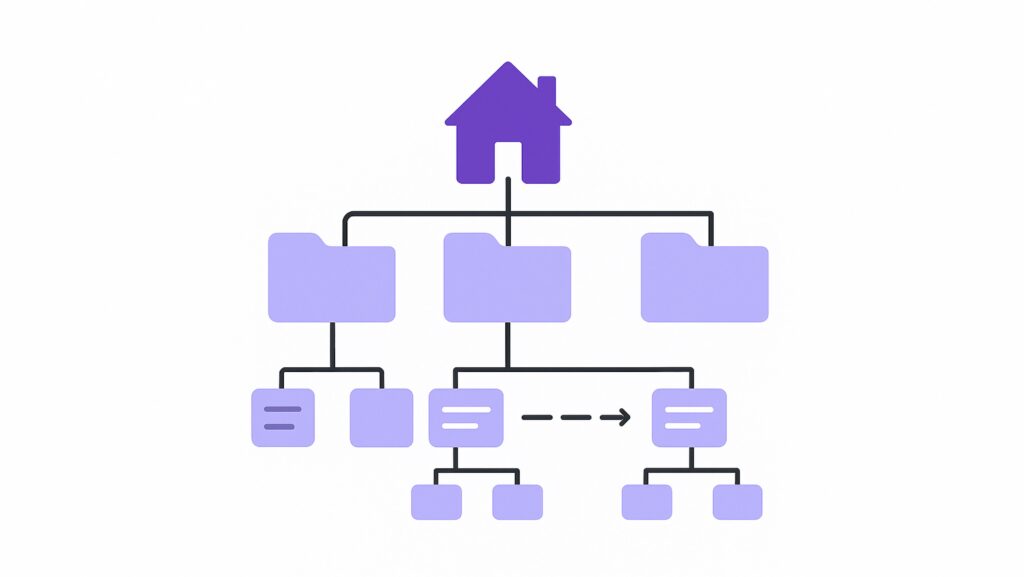

What Is Site Structure in SEO Terms?

Site structure is how your website content is organized, both visually for users and technically for search engines. Think of it like the layout of a grocery store. If cereal is next to car batteries, and dairy is in five different aisles… you’ll give up pretty fast.

In SEO, we aim for hierarchy and clarity.

Most strong structures follow a shape like this:

- Homepage → Main Category → Subcategory or Content Page

This logical flow helps search engines understand which pages are most important, and it helps users find what they’re looking for without friction.

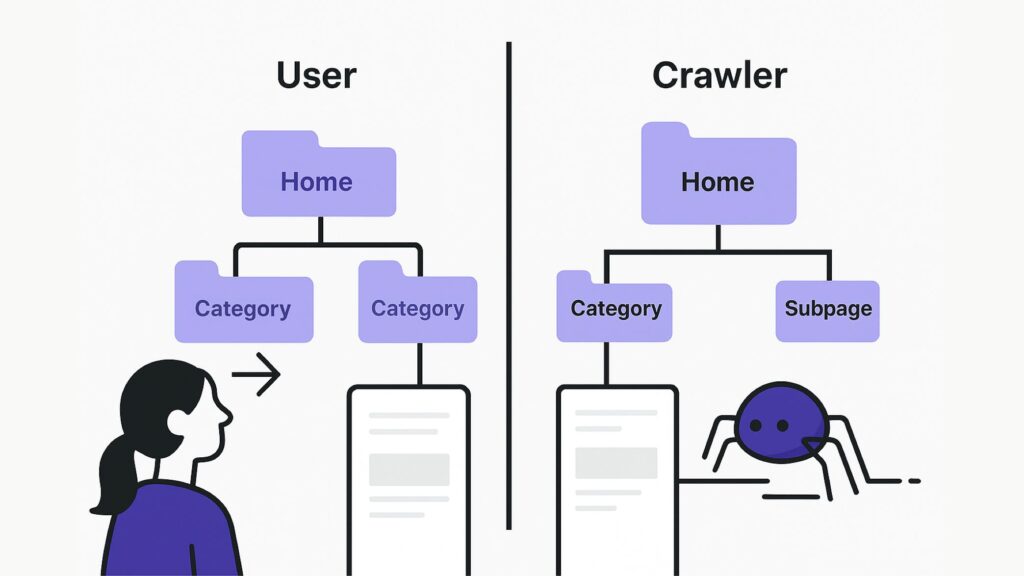

Why Does Navigation Matter for SEO?

Navigation isn’t just about menus at the top of your site. It’s about the experience you create as people move through your pages.

And here’s the twist: your users and Googlebot both “navigate” your site—but they do it differently.

- Users want ease. Clear categories, simple language, and not too many clicks.

- Search engines want structure. Clean URLs, internal links, and no rabbit holes.

If your site has a poor navigation setup, people get frustrated, and crawlers get lost. That’s a lose-lose.

But if your navigation is intuitive, people stay longer, discover more pages, and engage. Google loves that.

How Should You Structure Your Website for SEO?

Here’s where we get practical. If you’re building or auditing a site, here are a few principles to follow. Treat them less as a checklist and more as a mindset.

Start from the top down. Your homepage is command central. Every important category should be no more than one click away from it. These category pages are powerful because they tell Google what your site is about at a high level.

Build meaningful categories. Don’t create a dozen categories just because you can. Instead, group your content in ways that are actually helpful. If you’re running a fashion site, “Dresses” is better than “Things We Like.”

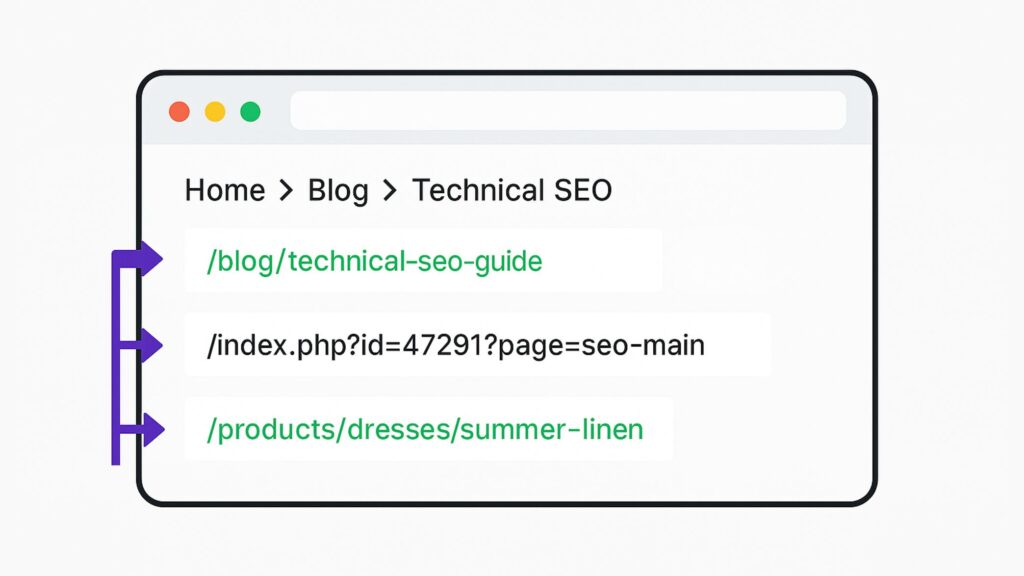

Keep your URL paths clean. Avoid long, messy strings with numbers and symbols. A URL like /blog/technical-seo-guide is far easier to understand than /index.php?id=47291&page=seo-main.

Use breadcrumb navigation. This helps users retrace their steps, and it also reinforces your structure in a format Google loves. It’s a win-win.

Avoid orphan pages. Every page on your site should be reachable via at least one internal link. If it’s not connected, Google might miss it entirely.

And finally, don’t bury important pages. If something matters to your business or your users, it shouldn’t be five clicks deep. The “3-click rule” isn’t a hard law, but it’s a good gut check.
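Both of those checks, orphan pages and click depth, can be approximated with a breadth-first search over your internal links. The sketch below assumes you have a map of each page to the pages it links to (for example from a crawler export). The site map here is invented, and "orphan" is treated loosely as "not reachable from the homepage".

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: page -> minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Pages that appear in the link map but cannot be reached from the homepage."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(click_depths(links, home)))


# Hypothetical internal link map: page -> pages it links to.
site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/technical-seo-guide"],
    "/products": ["/products/dresses"],
    "/products/dresses": ["/products/dresses/red-midi"],
    "/old-landing-page": ["/"],  # links out, but nothing links to it
}

depths = click_depths(site)
print("Unreachable:", unreachable_pages(site))
print("Deeper than 2 clicks:", sorted(p for p, d in depths.items() if d > 2))
```

Pages in the first list need at least one internal link. Pages in the second are candidates for a link from a category page or the homepage.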

The Unsung Hero of Site Architecture: Internal Linking

Internal links are the roads that connect your pages. But they also do so much more than that.

They help distribute authority from your most powerful pages (like your homepage or top blog posts) to pages that need a little love. They help search engines discover new content. And they subtly guide users toward the actions you want them to take.

When you add an internal link, think:

- “Is this helpful to the reader?”

- “Is this guiding the crawler to something important?”

- “Is there context here, or am I just stuffing links in for the sake of it?”

Intentional linking, over time, becomes one of your most powerful SEO tools.

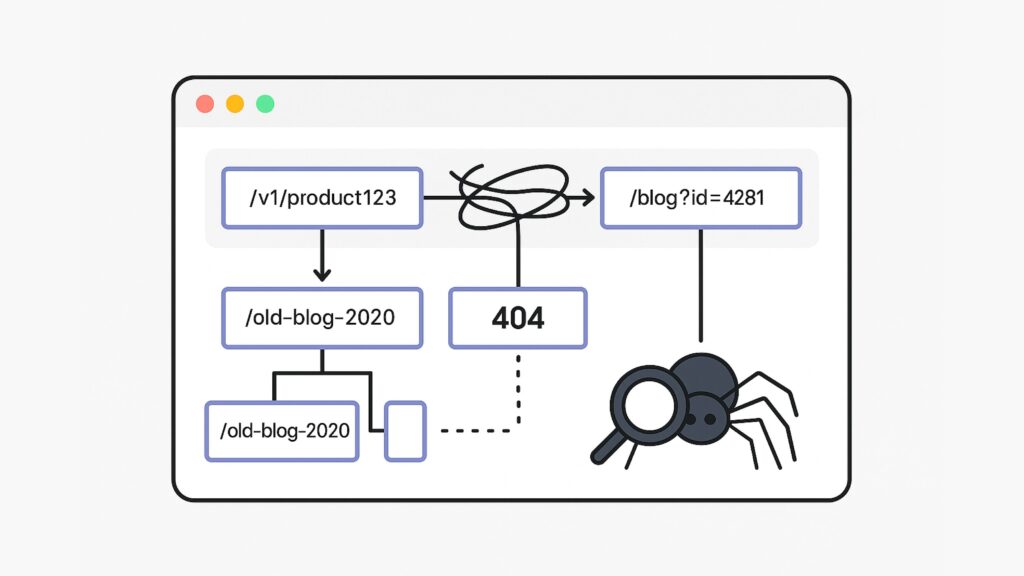

What Happens When You Get Site Structure Wrong?

Let’s be real: most websites don’t start off perfect. Maybe you added a blog as an afterthought. Maybe your product pages live on a subdomain. Maybe your old URLs still show /v1/ from 2018.

The problem is, poor structure accumulates clutter. And clutter confuses Google. It wastes crawl budget, fragments link equity, and makes it harder for your most important content to shine.

But the good news? Site structure is fixable.

You don’t have to rip everything down and rebuild. Start with a content inventory. Group similar pages. Redirect or consolidate duplicates. Clarify your menus. Improve internal linking. Over time, that structure becomes sharper, simpler, and more powerful.

Why Does PageSpeed Matter?

You’ve probably felt it yourself: you click a link, the loading bar starts crawling… and before the page even shows up, you’re gone. Back to the search results. Onto the next site. That’s how fragile attention is online.

Now imagine that’s your website.

Page speed isn’t just a UX issue; it’s a conversion killer, a bounce magnet, and yes, an SEO signal. When your site takes too long to load, both your visitors and Google quietly move on.

But what actually slows a site down? And more importantly, how do you fix it without becoming a full-time developer?

Let’s unpack it.

Why PageSpeed Matters for SEO and Users

Google has used page speed as a ranking signal for desktop searches since 2010 and extended it to mobile searches with the 2018 Speed Update. Since then it’s only become more important, especially on mobile. Today, page experience metrics (like Core Web Vitals) play a role in how your site performs in search.

But even if you ignore the algorithms, speed matters for one simple reason: people hate waiting.

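Widely cited industry studies suggest that a delay of just one second can cut conversions by around 7%, and that by three seconds roughly half of mobile visitors may abandon the page. Exact numbers vary by site, but the pattern is behavioral, not hypothetical. Speed equals trust. Slowness feels broken.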

And Google knows it.

How Is PageSpeed Measured?

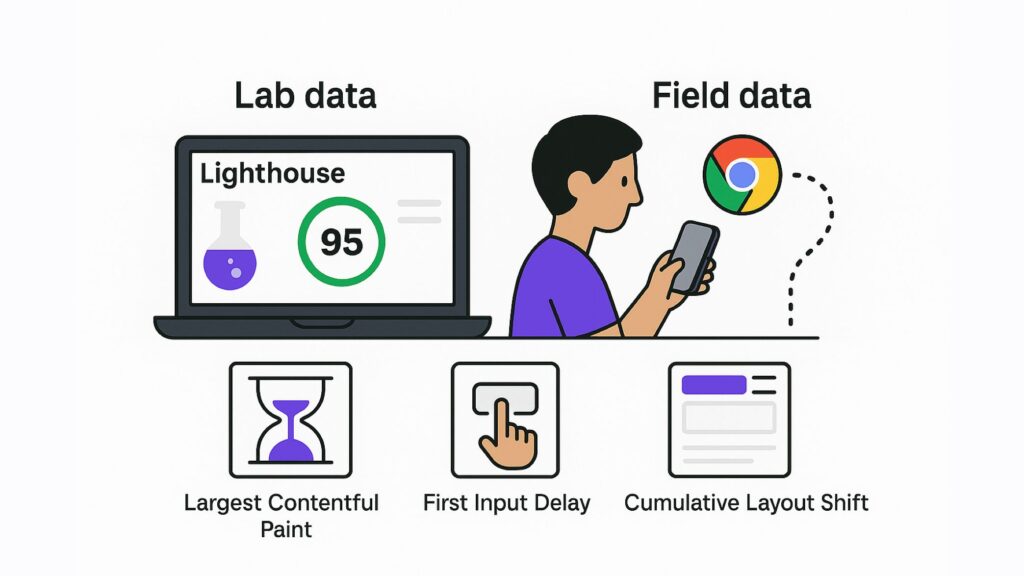

There are two main ways to measure your site’s speed: lab data and field data.

- Lab data is what you see in tools like Google PageSpeed Insights or Lighthouse. It’s a simulated test run in a controlled environment, great for debugging.

- Field data comes from real users, collected through the Chrome User Experience Report (CrUX). This is what Google actually uses to assess Core Web Vitals.

The three most important Core Web Vitals are:

- Largest Contentful Paint (LCP): How long it takes the biggest visible element (like a hero image or heading) to load. Under 2.5 seconds is good.

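- Interaction to Next Paint (INP): How quickly your page responds when someone clicks, taps, or types. Under 200 milliseconds is good. INP replaced First Input Delay (FID), whose threshold was 100 milliseconds, as a Core Web Vital in March 2024.

- Cumulative Layout Shift (CLS): How stable your layout is as it loads. Ever try to click a button and it jumps? That’s CLS. A score under 0.1 is good.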

But don’t get lost in the acronyms. The real takeaway is this: faster = better, always.
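If you pull these metrics into a spreadsheet or script, a tiny lookup can turn raw numbers into ratings. The sketch below uses the "good" and "poor" boundaries from Google's published Core Web Vitals guidance. The table includes INP (the responsiveness metric that replaced FID in 2024) alongside the legacy FID values, and the function name is just an illustration.

```python
# (good_up_to, poor_above) per metric, from Google's published guidance.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "FID": (100, 300),   # milliseconds (legacy metric, replaced by INP)
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a field measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical field measurements for one page.
for metric, value in {"LCP": 2.1, "INP": 350, "CLS": 0.3}.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```

Google assesses these at the 75th percentile of real visits, so feed in that percentile from your field data rather than a single lab run.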

What Slows Your Site Down?

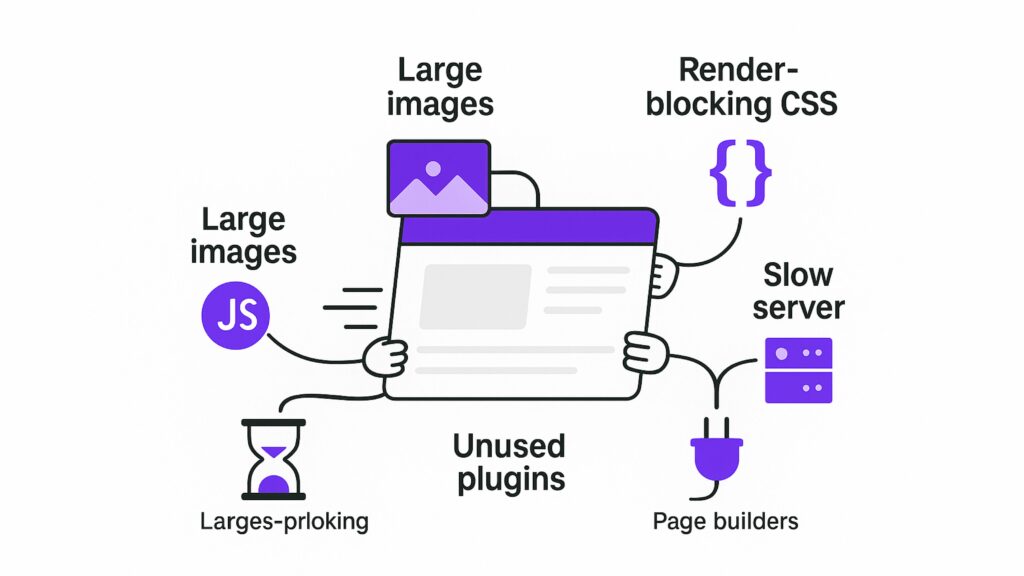

You don’t need to run a full audit to know where most performance problems come from. The usual suspects?

- Massive images that weren’t resized or compressed

- Too many JavaScript files loading before the page even renders

- Render-blocking CSS that prevents anything from showing until it’s done

- Slow or shared hosting dragging your response times down

- Bloated plugins or page builders trying to do too much

Even fonts can slow your site down if they’re loaded inefficiently.

Sometimes, the biggest problem is trying to make everything perfect: loading animations, hero videos, third-party widgets. It adds up fast.

How Do You Actually Make Your Site Faster?

Here’s the part where most SEO guides hit you with a checklist. But let’s keep it conversational. Think of these as approaches, not rules.

First, start with your images. Are they way bigger than they need to be? Are you uploading 4000px-wide photos just to display them at 300px? Tools like Squoosh or TinyPNG can shrink image sizes dramatically with little visible loss in quality. Use WebP format where possible, since it usually produces smaller files than JPEG or PNG.

Next, get lazy. Literally. Lazy loading means your images and videos only load when the user is about to see them. It’s like saving energy until it’s actually needed.

Then, trim the fat. Minify your CSS, JavaScript, and HTML to remove anything unnecessary. Your users don’t care about whitespace or comments in code; they just want your page to load.

Consider a CDN (Content Delivery Network) like Cloudflare. It distributes your content across global servers so users can fetch it from a nearby copy, which is a big win for international performance.

And don’t forget caching. Set up browser caching so returning users don’t have to reload your entire site every time they visit. It’s like remembering their face at the door instead of asking for ID again.
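For the image and lazy-loading advice above, a quick audit script can flag images worth a second look. This sketch uses Python's built-in HTML parser. The sample markup is invented, and the two checks (missing `loading="lazy"`, missing `width`/`height`) are a starting point, not a complete performance audit.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collect <img> tags that lack lazy loading or explicit dimensions."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src") or "(no src)"
        # Above-the-fold images (like a hero) are usually better loaded eagerly,
        # so treat this as something to review, not an automatic fix.
        if attr.get("loading") != "lazy":
            self.issues.append((src, "no loading=lazy"))
        # Explicit dimensions let the browser reserve space and avoid layout shift.
        if "width" not in attr or "height" not in attr:
            self.issues.append((src, "missing width/height"))


# Hypothetical page markup.
SAMPLE_HTML = """
<img src="/hero.webp" width="1200" height="600">
<img src="/team.jpg" loading="lazy">
<img src="/chart.webp" loading="lazy" width="640" height="360">
"""

audit = ImageAudit()
audit.feed(SAMPLE_HTML)
for src, problem in audit.issues:
    print(f"{src}: {problem}")
```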

Keeping Speed Under Control (Even After a Redesign)

Improving speed isn’t a one-time fix. Websites evolve. New features are added. Someone uploads a 6MB background video. You blink, and your score drops.

So build in habits:

- Test speed monthly (or whenever you launch a new section)

- Stick with lean, fast-loading themes, especially on WordPress

- Be cautious with plugins (more isn’t always better)

- Choose a host that prioritizes speed (cheap shared hosting won’t cut it forever)

- Monitor your Core Web Vitals in Google Search Console; the report sits under “Experience”

If you stay proactive, your site won’t just pass technical audits; it’ll feel better to use.

Extra Technical SEO Tips and Strategies

You’ve nailed the fundamentals. Your site is fast, mobile-friendly, indexable, and structurally sound. But if you want to stand out in a competitive SERP, now is the time to go a step further. These are the technical SEO strategies that move you from good enough to technically excellent.

Let’s unpack each one with more depth and intention.

Noindex and the Hidden Weight of Unnecessary Pages

Not every page on your website should be indexed by search engines. In fact, letting low-value pages get indexed can actively hold your site back. These pages often dilute topical authority, waste crawl budget, and sometimes even compete with your better-performing content.

Tag pages, thin category archives, internal search results, and filtered product listings are common culprits. They often contain repetitive or weak content that offers little value on their own.

Here’s how to clean it up:

- Add a <meta name="robots" content="noindex"> tag to pages that don’t offer unique value

- Keep those pages accessible to Google so the noindex tag is seen (don’t block them with robots.txt)

- Make noindex part of your content publishing checklist, especially for autogenerated or archive pages

This one change can dramatically reduce index bloat and help Google focus on what matters.
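To verify that noindex landed where you intended (and nowhere else), you can scan a page's HTML for its indexing signals. The sketch below reads the robots meta tag and the canonical link with Python's built-in parser. The sample markup is hypothetical, and it does not cover the `X-Robots-Tag` HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class IndexSignals(HTMLParser):
    """Extract the robots meta directive and canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            directives = [d.strip().lower() for d in (attr.get("content") or "").split(",")]
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True
        elif tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonical = attr.get("href")


def index_signals(html: str) -> dict:
    parser = IndexSignals()
    parser.feed(html)
    return {"noindex": parser.noindex, "canonical": parser.canonical}


# Hypothetical tag archive page.
TAG_PAGE = """
<head>
  <meta name="robots" content="noindex, follow">
  <link rel="canonical" href="https://example.com/tag/misc">
</head>
"""
print(index_signals(TAG_PAGE))
```

Run it across your key landing pages as well: a noindex on a page you want ranked is a far more expensive mistake than a stray tag archive in the index.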

Mobile Usability Requires More Than Just a Responsive Theme

Your website might look responsive on desktop, but that doesn’t guarantee a good mobile experience. And since Google uses your mobile version for indexing by default, even small mobile issues can hurt your visibility.

Check the following on a real device:

- Is your text readable without pinching or zooming?

- Do buttons and links have enough space between them?

- Does anything shift or break as the page loads?

- Are menus, banners, and pop-ups usable on small screens?

Automated tools such as Lighthouse or Chrome DevTools device emulation can catch some issues (Google retired Search Console’s Mobile Usability report in late 2023), but manual testing reveals more. You don’t need to be pixel-perfect across every phone, but aim for speed, stability, and usability on the most common screen sizes.

Managing Crawl Budget the Smart Way

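Crawl budget refers to the number of pages Googlebot is willing to crawl on your site in a given timeframe. If your site is small, this is rarely an urgent issue; Google’s own guidance frames crawl budget as a concern mainly for very large or very frequently updated sites. But as your URL count climbs into the thousands, especially with faceted or parameterized URLs, optimization becomes worthwhile.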

Here’s how crawl budget gets wasted:

- Dozens of low-quality pages (like thin tag archives or duplicate product listings)

- Long redirect chains that stall the bot

- Broken links or orphan pages that lead nowhere

- Uncontrolled faceted navigation or filtered URLs with infinite variations

To improve crawl efficiency:

- Use noindex on low-priority pages

- Keep your sitemap updated with only relevant URLs

- Audit your internal linking so crawlers can find your best pages easily

- Consolidate duplicate or overlapping content with canonicals or redirects

The goal is simple. Make every crawl session count.

Using Hreflang to Serve the Right Language and Region

If your site targets multiple countries or languages, hreflang is the tag that makes sure the right users see the right version of a page.

Done well, hreflang improves both SEO and UX. Done poorly, it causes confusion, indexing errors, and missed opportunities.

Here’s what good implementation looks like:

- Every version of a page includes hreflang references to the others, including itself

- The hreflang attributes match the canonical version of each page

- Language codes follow ISO 639-1 and optional region codes follow ISO 3166-1 alpha-2 (for example, en-GB for UK English)

- Hreflang is either in the <head>, HTTP headers, or the XML sitemap

Be cautious. Many sites add hreflang tags but forget to make them bidirectional. If Page A references Page B, Page B must also reference Page A. Use validation tools to check your setup before relying on it.
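The bidirectional rule is simple enough to check in code. The sketch below assumes you have collected each page's hreflang annotations into a dictionary (page URL mapped to its language-code and alternate-URL pairs). The example.com URLs are placeholders, and dedicated validation tools check much more than this.

```python
def missing_return_links(hreflang: dict[str, dict[str, str]]) -> list[tuple[str, str]]:
    """Find (alternate, page) pairs where the alternate does not link back to the page.

    hreflang maps each page URL to its {language_code: alternate_url} annotations.
    """
    problems = []
    for page, alternates in hreflang.items():
        for alt_url in alternates.values():
            if alt_url == page:
                continue  # self-reference, nothing to reciprocate
            if page not in hreflang.get(alt_url, {}).values():
                problems.append((alt_url, page))
    return problems


# Hypothetical annotations: the German page forgets to reference the English one.
annotations = {
    "https://example.com/en/": {
        "en-GB": "https://example.com/en/",
        "de-DE": "https://example.com/de/",
    },
    "https://example.com/de/": {
        "de-DE": "https://example.com/de/",
    },
}

for alternate, page in missing_return_links(annotations):
    print(f"{alternate} is missing a return link to {page}")
```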

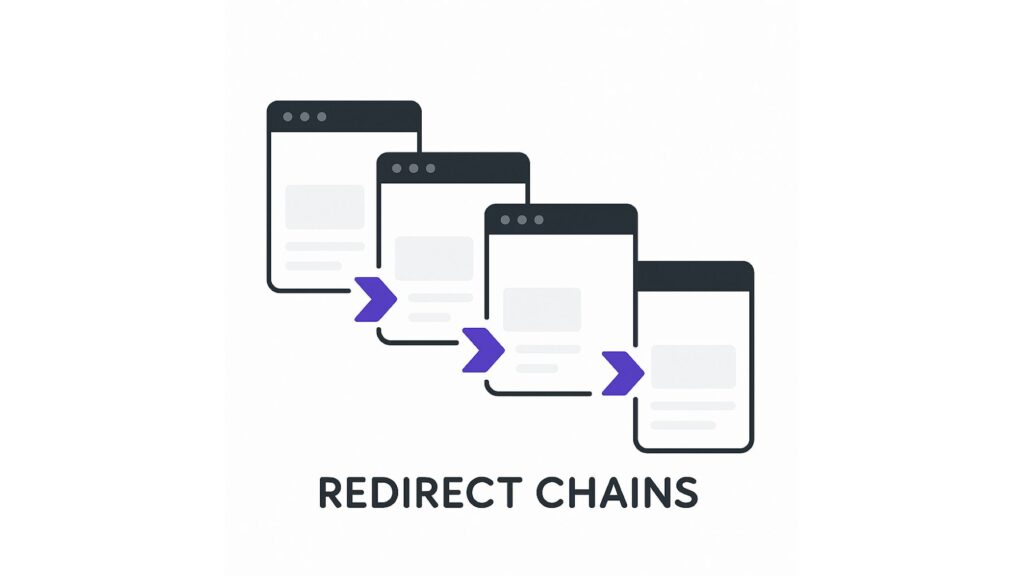

Fixing Redirect Chains Before They Pile Up

Redirects are fine. Redirect chains are not. When a browser or crawler has to jump through multiple URLs to reach a final destination, everything slows down. Authority gets diluted. Load time increases. And Google may eventually stop following the chain altogether.

Here’s how to prevent redirect bloat:

- Keep your redirects to a single hop

- Update old internal links to point directly to the final URL

- Avoid stacking redirects across redesigns or platform changes

Redirects should feel like a clean handoff. If they start feeling like a maze, it’s time to clean them up.
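If you can export your redirect rules as a simple source-to-target map, collapsing chains to a single hop is mostly bookkeeping. Here is a minimal sketch. The paths are invented, and the function names are only for illustration.

```python
def resolve(url: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow a URL through the redirect map and return every URL visited."""
    chain = [url]
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        if nxt in chain or len(chain) > max_hops:
            raise ValueError(f"redirect loop or overly long chain starting at {url}")
        chain.append(nxt)
    return chain


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite every rule so the source points straight at its final destination."""
    return {source: resolve(source, redirects)[-1] for source in redirects}


# Hypothetical rules accumulated over several redesigns.
rules = {
    "/v1/pricing": "/pricing-old",
    "/pricing-old": "/plans",
    "/plans": "/pricing",
}

print(resolve("/v1/pricing", rules))  # the full chain, three hops long
print(flatten(rules))                 # every source now maps to /pricing
```

The flattened map is what you would hand to your server or CMS redirect configuration, and the same final URLs are the ones your internal links should point to.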

Handling URL Parameters Before They Create Chaos

URL parameters like ?ref=, &color=blue, or &sort=price can be useful for users. But for crawlers, they often mean duplication, crawl waste, and analytics chaos.

Uncontrolled, these variations can generate thousands of unnecessary pages. And unless managed, Google may treat them as separate URLs with overlapping content.

Best practices include:

- Adding canonical tags to parameter-based pages that point to the clean version

- Using noindex if the filtered or sorted version shouldn’t appear in search results

- Controlling crawling of problem parameters with robots.txt rules and consistent internal links (Google retired Search Console’s URL Parameters tool in 2022)

- Keeping marketing UTMs out of indexable URLs whenever possible

URL parameters aren’t bad, but they need supervision.
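One way to supervise parameters is to compute the "clean" version of each URL, which is also the value you would put in its canonical tag. The sketch below strips tracking parameters and a configurable set of presentation-only parameters. Which parameters count as noise is an assumption you would adjust for your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameters; extend for your own analytics setup.
TRACKING_PREFIXES = ("utm_",)
TRACKING_PARAMS = {"ref", "gclid", "fbclid"}


def clean_url(url: str, drop: frozenset[str] = frozenset({"sort"})) -> str:
    """Remove tracking and presentation-only parameters and sort what remains."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIXES)
        and key.lower() not in TRACKING_PARAMS
        and key.lower() not in drop
    ]
    kept.sort()  # stable ordering so ?a=1&b=2 and ?b=2&a=1 collapse together
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(clean_url("https://example.com/shoes?utm_source=news&color=blue&ref=home&sort=price"))
print(clean_url("https://example.com/shoes?ref=abc"))
```

Here `color` is kept because a color filter may deserve its own indexable page, while `sort` is dropped because it only reorders the same content. Your own split may differ.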

Making Infinite Scroll Crawlable

Infinite scroll feels smooth for users, but it’s a dead-end for bots if implemented poorly. If content only appears as the user scrolls, and that content isn’t available through crawlable URLs, Google won’t see it.

To fix this:

- Create paginated fallback URLs (like /blog?page=2) behind the scenes

- Link to these fallback pages in your HTML, even if hidden from view

- Load additional content in a way that still renders to bots (avoid JS-only injection without SSR)

Better yet, use both infinite scroll and pagination together. You get smooth UX and strong crawlability.

Writing Smarter Sitemaps

Your sitemap isn’t just a list of URLs. It’s a curated invitation to Google, saying, “Here’s what matters on my site.”

Make it count by:

- Including only indexable, high-value URLs

- Updating it automatically when content is added or removed

- Using separate sitemaps for blogs, products, images, or videos if your site is large

- Ensuring lastmod reflects real changes to each page

Monitor how many sitemap URLs are actually indexed via Google Search Console. If the gap is large, something’s broken.
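Most CMSs generate sitemaps for you, but it helps to see how little is involved. This sketch builds a minimal sitemap with `loc` and `lastmod` using Python's standard library. The URLs and dates are placeholders, and in a real setup `lastmod` should come from your content's actual modification time.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. YYYY-MM-DD
    ET.indent(urlset)  # pretty-print; requires Python 3.9+
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Placeholder pages: include only indexable, canonical URLs.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/technical-seo-guide", "2024-04-18"),
])
print(sitemap_xml)
```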

Taking Structured Data to the Next Level

Schema markup helps Google understand what your content means—not just what it says. Most sites stop at the basics, but there’s much more potential if you go deeper.

Advanced use cases include:

- FAQ and How-To schema to enhance blog posts and product guides

- Breadcrumb schema for improved navigation visibility in SERPs

- Organization schema for homepages and About pages

- Product review schema for category-level trust signals

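These don’t guarantee ranking boosts, but they can increase your search visibility and click-through rates. Keep in mind that Google decides which rich results to show: since 2023 it has limited FAQ rich results to a small set of authoritative sites and stopped showing How-To rich results. The Schema.org vocabulary, Google’s Rich Results Test, and plugins like RankMath can help you implement markup with little to no code.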

Using Log Files to See How Googlebot Behaves

Log files are like security footage for your server. They show exactly what Googlebot crawled, when it crawled it, and how often.

This data can help you:

- Spot valuable pages that aren’t being crawled at all

- Identify overcrawled junk URLs

- Detect crawl spikes that match errors or outages

- Refine your robots.txt and sitemap priorities based on real bot behavior

You’ll need access to your raw server logs and a bit of help from devs or log analysis tools. But if you want to know how Googlebot really interacts with your site, this is the deepest insight you’ll get.
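As a taste of what log analysis involves, the sketch below counts Googlebot requests per path and status code. It assumes the common "combined" access log format used by Apache and Nginx, and the sample lines are fabricated for illustration. It also trusts the user-agent string, which can be spoofed. A production check would verify Googlebot via reverse DNS.

```python
import re
from collections import Counter

# Matches the request, status, and final quoted field (user agent) of a combined-format line.
LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines: list[str]) -> Counter:
    """Count requests per (path, status) where the user agent mentions Googlebot."""
    hits: Counter = Counter()
    for line in lines:
        match = LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), match.group("status"))] += 1
    return hits


# Fabricated sample lines in combined log format.
SAMPLE_LOG = """\
66.249.66.1 - - [10/May/2024:06:25:01 +0000] "GET /blog/technical-seo-guide HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/May/2024:06:25:09 +0000] "GET /tag/misc?page=47 HTTP/1.1" 200 2210 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/May/2024:06:26:30 +0000] "GET /tag/misc?page=47 HTTP/1.1" 200 2210 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/May/2024:06:27:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.7 - - [10/May/2024:06:27:15 +0000] "GET / HTTP/1.1" 200 8450 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
"""

hits = googlebot_hits(SAMPLE_LOG.splitlines())
for (path, status), count in hits.most_common():
    print(f"{count:>3}  {status}  {path}")
```

Even this crude tally surfaces the patterns described above: a junk tag URL crawled repeatedly, and a 404 that Googlebot still keeps requesting.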
